If you use AI tools or even just read content shaped by them, you’re already part of this story. AI can help you work faster, learn better, and make smarter decisions. But without proper control, the same AI can confuse you, mislead you, or even harm you.

That’s why controlling the output of generative AI systems isn’t about fear or restriction. It’s about protecting you and the people around you.

- Generative AI does not “know” facts. It predicts likely words from patterns in its training data, not from truth or understanding.

- Uncontrolled AI can spread misinformation, bias, and unsafe advice. Confident-sounding errors are hard to detect without safeguards.

- Output control helps keep AI accurate, ethical, and trustworthy. It limits false claims, hallucinations, and harmful guidance.

- The future of safe AI depends on strong human oversight. Responsible review helps ensure AI supports people instead of misleading them.

1. AI Sounds Confident Even When It’s Wrong

Generative AI can speak with authority, even when the information is false.

If you’ve ever read an AI answer and thought, “That sounds right,” you’re not alone.

AI writes smoothly and confidently, making mistakes difficult to spot. The problem is that AI doesn’t verify truth; it predicts language. When output isn’t controlled, false information can slip through easily.

This becomes dangerous when you rely on AI for learning or decision-making. You might trust an answer simply because it sounds sure. Over time, this can erode your ability to judge what’s real.

Tip: Always treat AI answers as a starting point, not the final truth.

2. Misinformation Can Spread Faster Than You Expect

One wrong answer can reach thousands or millions of people.

When AI outputs aren’t controlled, misinformation doesn’t stay small. It spreads quickly through blogs, social media, and search results. You might share something believing it’s accurate, only to later learn it wasn’t.

This is especially risky in areas like health, finance, or public safety. According to the World Health Organization, inaccurate health information can directly harm people’s well-being.

You deserve information that helps you, not information that confuses you. It’s frustrating to realise you trusted something false, but it’s not your fault.

3. Bias Can Quietly Affect How You’re Treated

AI can reflect human bias and amplify it.

AI learns from existing data. Unfortunately, that data often includes bias. Without output control, AI can repeat stereotypes or unfair assumptions. This can affect hiring, education, or even access to services.

If you’ve ever felt misunderstood or unfairly judged, this can hit close to home. The American Psychological Association notes that biased algorithms can worsen inequality.

Output controls help catch and reduce bias before it reaches you. You deserve systems that treat you fairly, even when powered by machines.

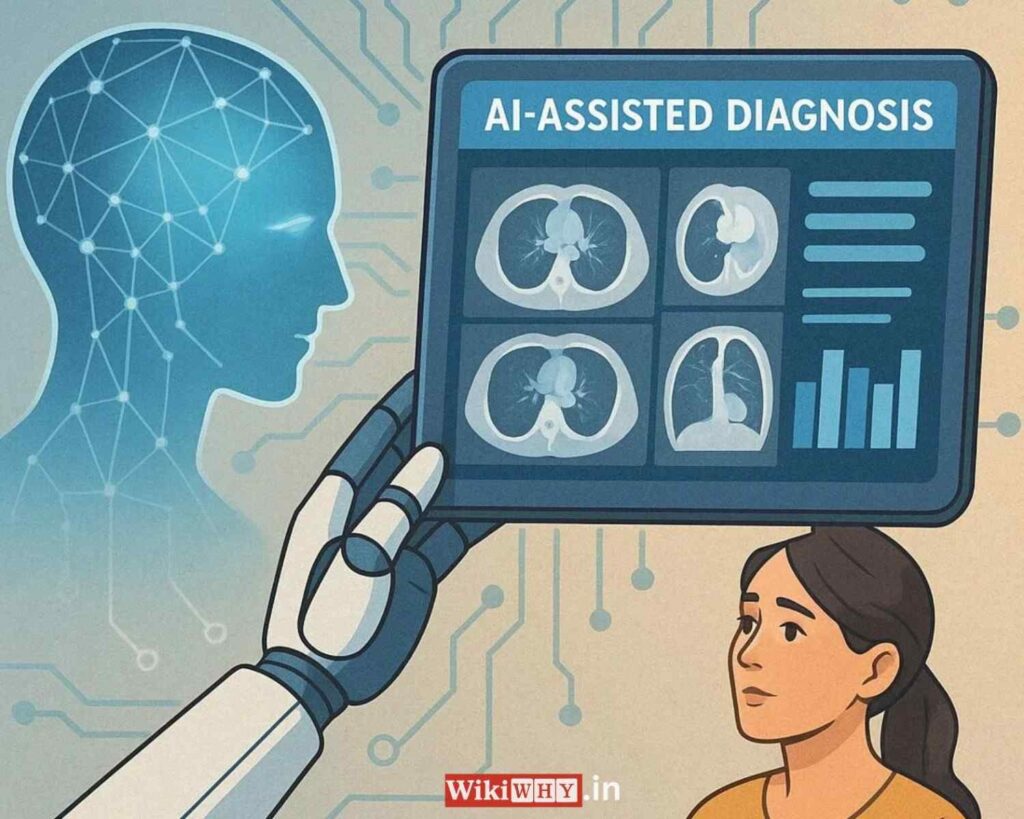

4. Unsafe Medical Advice Can Put You at Risk

Health advice should never be guesswork.

You might turn to AI when you’re worried about symptoms or a diagnosis. That’s understandable. However, uncontrolled AI can provide advice that appears helpful but is medically incorrect or outdated.

The World Medical Association warns that AI should support, not replace, medical professionals.

Strong output controls help ensure that AI clearly states its limits and avoids risky guidance. If something feels serious, trust your instincts and seek care from a qualified medical professional.

5. Legal and Financial Mistakes Can Be Costly

One wrong suggestion can have real consequences.

AI is now used to help with contracts, taxes, and financial planning. But when outputs aren’t controlled, AI may invent laws, misinterpret rules, or miss crucial details.

You shouldn’t have to double-check everything out of fear. Controlled outputs reduce false certainty and encourage verification, protecting you from costly errors.

It’s okay to use AI for help, but don’t trust it blindly.

6. Children and Teens Need Extra Protection

Young minds are especially vulnerable to unchecked AI content.

Kids use AI to learn, explore, and ask questions they may not ask adults. Without safeguards, AI can expose them to harmful ideas or inappropriate advice.

The American Academy of Pediatrics emphasizes careful oversight of AI tools used by children.

Output controls help keep learning safe and age-appropriate. Protecting curiosity should never mean risking safety.

7. Trust Is the Heart of AI’s Future

If you don’t trust AI, you won’t use it.

Trust grows when AI behaves predictably and responsibly. It breaks when AI presents falsehoods as fact, confuses, or harms. Once trust is lost, even helpful tools feel unsafe.

Controlled outputs create consistency. They help AI admit uncertainty and stay within ethical limits. This builds confidence for you and for everyone else.

You shouldn’t have to wonder if technology is working against you.

8. Human Oversight Keeps AI Accountable

AI doesn’t understand consequences, but you do.

Ethical behaviour doesn’t appear automatically; people have to design it in. Output control allows humans to review, guide, and correct AI behaviour. This makes it clear who is responsible when something goes wrong.

The OECD AI Principles stress transparency and accountability. Without oversight, no one knows who is responsible for harm. With it, AI becomes safer for everyone.

Technology works best when humans stay in the loop.

Final Thoughts: Control Isn’t Limitation, It’s Care

You might worry that controlling AI will slow progress. In practice, the opposite is often true.

Control makes AI reliable, ethical, and worthy of your trust.

Every system that speaks to you should respect your safety, dignity, and intelligence. That’s why guiding what AI says matters so deeply.

You deserve technology that helps you rather than harms you.

Helpful Reminders

- Tip: Treat AI answers as guidance, not authority.

- Tip: Verify important information with trusted sources.

- Tip: Support tools that prioritise safety and transparency.